Sumo Logic App for Google BigQuery

The Google BigQuery App helps you monitor data and activity in your BigQuery data warehouse. The preconfigured dashboards provide insight into the projects, operations, queries, user management operations, user activities, and billed GBs in BigQuery.

Log Types

The Google BigQuery App uses:

- Google Cloud Audit Logs - Logs events on multiple GCP services, including BigQuery.

Sample Query

_sourceCategory=*gcp* logName resource "type":"bigquery_resource"

| parse regex "\"logName\":\"(?<log_name>[^\"]+)\""

| where log_name matches "projects/*/logs/cloudaudit.googleapis.com%2Factivity"

| json "message.data.resource.labels", "message.data.resource.labels.project_id" as labels, project

| timeslice 1h

| count as operations by _timeslice, project

| transpose row _timeslice column project
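To make the stages of the sample query concrete, the following Python sketch mimics them on a handful of made-up records: extract `logName` with a regex, keep only activity audit logs, read the project ID, bucket by hour, and count per project. The record layout, project names, and timestamps are assumptions shaped only to match the JSON paths used in the query. This is an illustration of the logic, not how Sumo Logic executes searches.

```python
import json
import re
from collections import Counter
from datetime import datetime, timezone
from fnmatch import fnmatchcase

LOG_NAME_RE = re.compile(r'"logName":"(?P<log_name>[^"]+)"')
ACTIVITY_PATTERN = "projects/*/logs/cloudaudit.googleapis.com%2Factivity"


def make_log(project, log_type):
    """Build a hypothetical raw log line with the fields the query reads."""
    record = {
        "message": {
            "data": {
                "logName": f"projects/{project}/logs/cloudaudit.googleapis.com%2F{log_type}",
                "resource": {
                    "type": "bigquery_resource",
                    "labels": {"project_id": project},
                },
            }
        }
    }
    return json.dumps(record, separators=(",", ":"))


def count_operations(logs):
    """Return {hour_bucket: {project: count}}, like the transposed query result."""
    table = {}
    for received_at, raw in logs:
        match = LOG_NAME_RE.search(raw)  # parse regex ... log_name
        if not match or not fnmatchcase(match.group("log_name"), ACTIVITY_PATTERN):
            continue  # where log_name matches ...
        labels = json.loads(raw)["message"]["data"]["resource"]["labels"]
        project = labels["project_id"]  # json ... as labels, project
        bucket = received_at.replace(minute=0, second=0, microsecond=0)  # timeslice 1h
        table.setdefault(bucket, Counter())[project] += 1  # count by _timeslice, project
    return {bucket: dict(counts) for bucket, counts in table.items()}


# Made-up sample data: (receipt time, raw log line)
SAMPLE_LOGS = [
    (datetime(2024, 1, 1, 9, 5, tzinfo=timezone.utc), make_log("alpha", "activity")),
    (datetime(2024, 1, 1, 9, 40, tzinfo=timezone.utc), make_log("alpha", "activity")),
    (datetime(2024, 1, 1, 9, 50, tzinfo=timezone.utc), make_log("beta", "data_access")),
    (datetime(2024, 1, 1, 10, 15, tzinfo=timezone.utc), make_log("beta", "activity")),
]

if __name__ == "__main__":
    for bucket, counts in sorted(count_operations(SAMPLE_LOGS).items()):
        print(bucket.isoformat(), counts)
```

The `data_access` record is dropped by the wildcard match, which is the same effect the `where log_name matches` clause has in the query.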

Collecting Logs for the Google BigQuery App

This section describes the Sumo pipeline for ingesting logs from Google Cloud Platform (GCP) services, and provides instructions for configuring log collection for the Google BigQuery App.

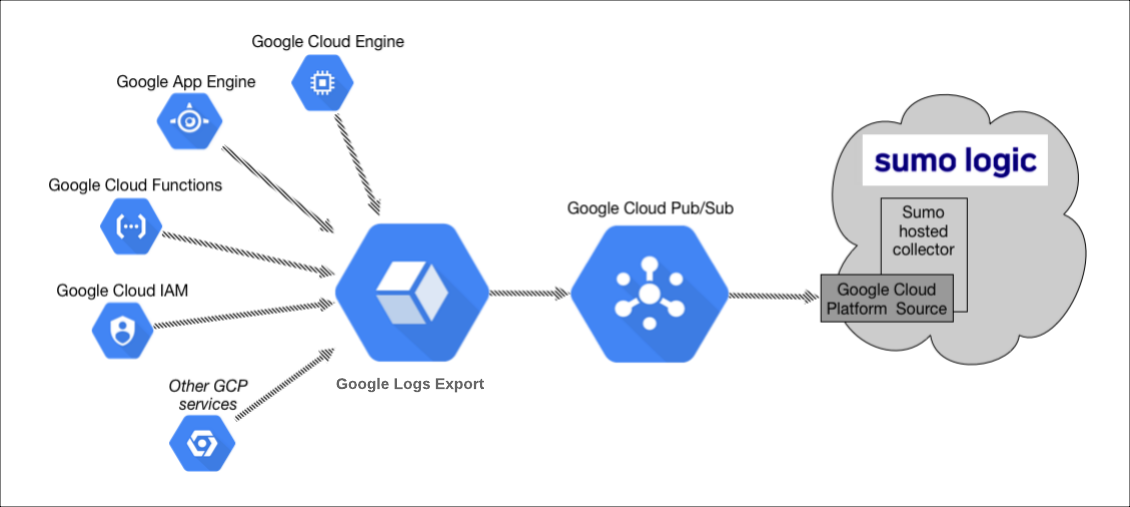

Collection Process for GCP Services

The key components in the collection process for GCP services are Google Logs Export, Google Cloud Pub/Sub, and Sumo’s Google Cloud Platform (GCP) source running on a hosted collector.

The GCP service generates logs which are exported and published to a Google Pub/Sub topic through Stackdriver. You will then set up a Sumo Logic Google Cloud Platform source that subscribes to this topic and receives the exported log data.

Configuring collection for GCP uses the following process:

- Configure a GCP source on a hosted collector. You'll obtain the HTTP URL for the source.

- Create a topic in Google Pub/Sub and subscribe the GCP source URL to that topic.

- Create an export of GCP logs from Google Stackdriver Logging. Exporting involves writing a filter that selects the log entries you want to export, and choosing a Pub/Sub topic as the destination. The filter and destination are held in an object called a sink.

See the following sections for configuration instructions.

Configure a Google Cloud Platform Source

The Google Cloud Platform (GCP) Source receives log data from Google Pub/Sub.

You can use the same GCP Source to receive log data from multiple GCP services. For example, you can send logs collected from Google Cloud Application Engine, Google Cloud IAM, and Google Cloud Audit.

However, this is not recommended because you cannot assign a specific Source Category value to each GCP service. If you create a separate GCP Source for each service, you can define a specific Source Category for each one.

This Source will be a Google Pub/Sub-only Source, which means that it will only be usable for log data formatted as data coming from Google Pub/Sub.
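For orientation, a Pub/Sub push subscription delivers each message as an HTTP POST whose JSON body wraps the payload, base64-encoded, under `message.data`. The sketch below builds and decodes such an envelope. The log entry, subscription name, and helper function names are hypothetical, and the sketch makes no claim about how the Sumo Logic source processes requests internally.

```python
import base64
import json


def build_push_envelope(log_entry: dict, subscription: str) -> bytes:
    """Wrap a log entry the way a Pub/Sub push request body is shaped."""
    data = base64.b64encode(json.dumps(log_entry).encode("utf-8")).decode("ascii")
    envelope = {
        "message": {"data": data, "messageId": "1", "attributes": {}},
        "subscription": subscription,
    }
    return json.dumps(envelope).encode("utf-8")


def decode_push_envelope(body: bytes) -> dict:
    """Recover the original log entry from a push request body."""
    envelope = json.loads(body)
    raw = base64.b64decode(envelope["message"]["data"])
    return json.loads(raw)


if __name__ == "__main__":
    # Hypothetical entry and subscription name, for illustration only.
    entry = {"resource": {"type": "bigquery_resource"}}
    body = build_push_envelope(entry, "projects/my-project/subscriptions/sumo-push")
    print(decode_push_envelope(body))
```

Data posted to the Source URL in any other shape does not match this envelope, which is why the Source is described as Pub/Sub-only.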

- In Sumo Logic select Manage Data > Collection > Collection.

- Select an existing Hosted Collector upon which to add the Source. If you don't already have a Collector you'd like to use, create one, using the instructions on Configure a Hosted Collector.

- Click Add Source next to the Hosted Collector and click Google Cloud Platform.

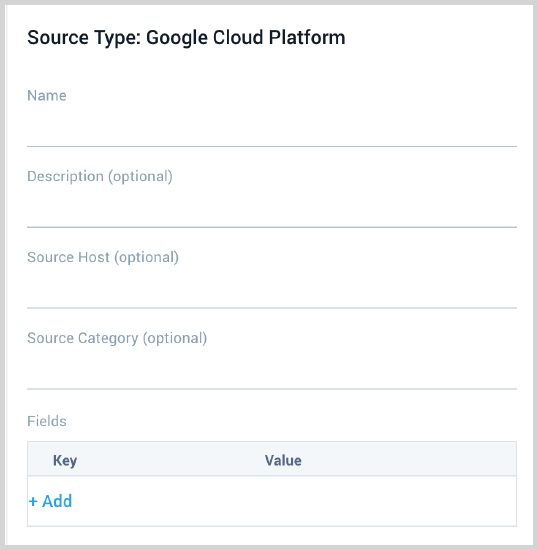

- Enter a Name to display for the Source. A Description is optional.

- Source Host (Optional). The Source Host value is tagged to each log and stored in a searchable metadata field called _sourceHost. Avoid using spaces so you do not have to quote them in keyword search expressions. This can be a maximum of 128 characters.

- Source Category (Optional). The Source Category value is tagged to each log and stored in a searchable metadata field called _sourceCategory. See our Best Practices: Good Source Category, Bad Source Category. Avoid using spaces so you do not have to quote them in keyword search expressions. This can be a maximum of 1,024 characters.

- Fields. Click the +Add Field link to add custom log metadata Fields, then define the fields you want to associate. Each field needs a name (key) and value. Look for one of the following icons and act accordingly:

- If an orange triangle with an exclamation point is shown, use the option to automatically add or enable the nonexistent fields before proceeding to the next step. The orange icon indicates that the field doesn't exist, or is disabled, in the Fields table schema. If a field is sent to Sumo that does not exist in the Fields schema or is disabled, it is ignored (known as dropped).

- If a green circle with a checkmark is shown, the field exists and is already enabled in the Fields table schema. Proceed to the next step.

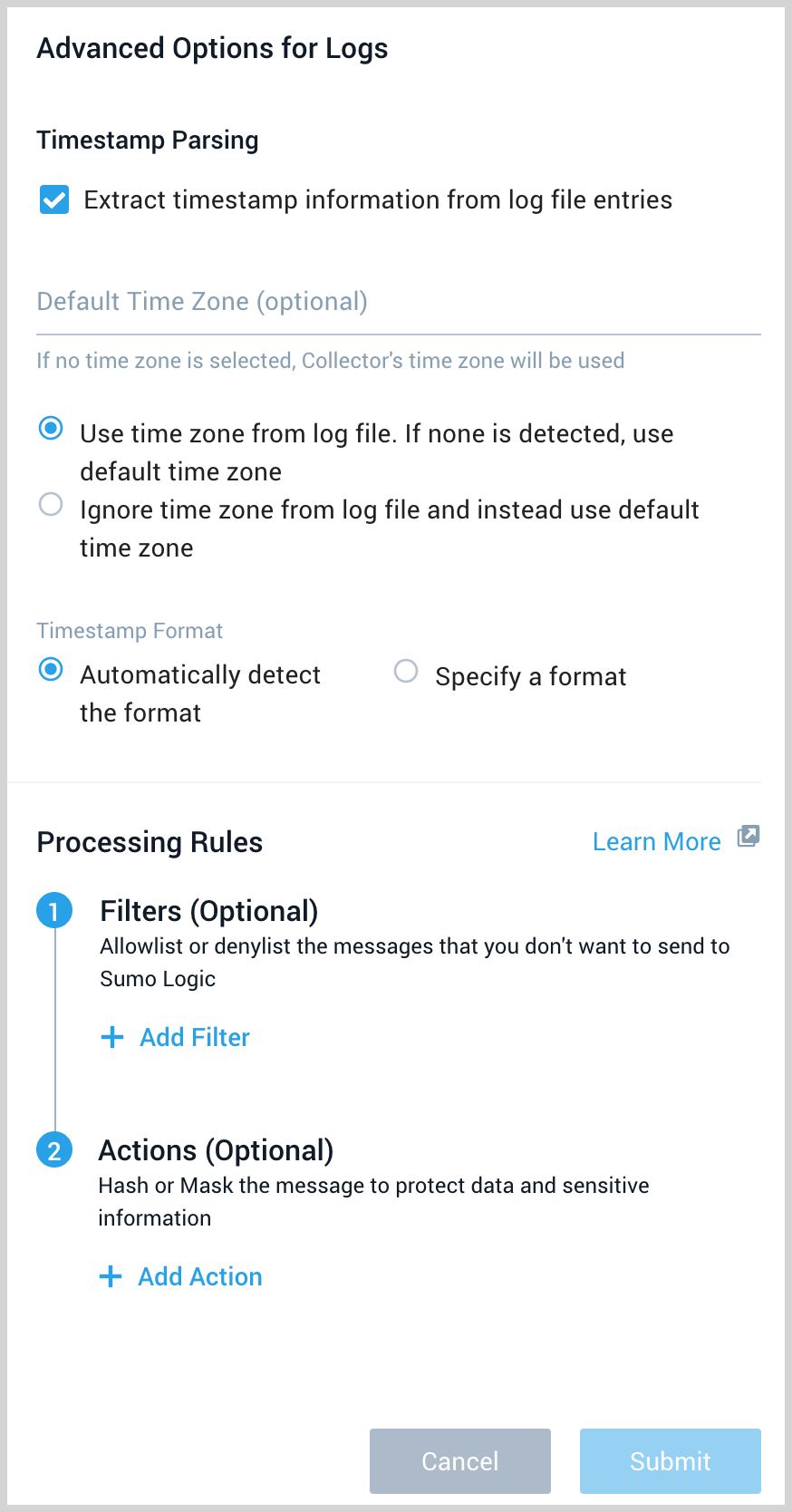

- Advanced Options for Logs.

- Timestamp Parsing. This option is selected by default. If it's deselected, no timestamp information is parsed at all.

- Time Zone. There are two options for Time Zone. You can use the time zone present in your log files, and then choose an option in case time zone information is missing from a log message. Or, you can have Sumo Logic completely disregard any time zone information present in logs by forcing a time zone. It's very important to have the proper time zone set, no matter which option you choose. If the time zone of logs can't be determined, Sumo Logic assigns logs UTC; if the rest of your logs are from another time zone your search results will be affected.

- Timestamp Format. By default, Sumo Logic will automatically detect the timestamp format of your logs. However, you can manually specify a timestamp format for a Source. See Timestamps, Time Zones, Time Ranges, and Date Formats for more information.

- Processing Rules. Configure any desired filters, such as allowlist, denylist, hash, or mask, as described in Create a Processing Rule.

- When you are finished configuring the Source, click Save.

Configure a Pub/Sub Topic for GCP

You need to configure a Pub/Sub Topic in GCP and add a subscription to the Source URL that belongs to the Sumo Logic Google Cloud Platform Source you created. Once you configure the Pub/Sub, you can export data from Google Logging to the Pub/Sub. For example, you can export Google App Engine logs, as described on Collect Logs for Google App Engine.

- Create a Pub/Sub Topic in GCP. See Google Cloud documentation for the latest configuration steps.

- Create a Pub/Sub subscription to the Source URL that belongs to the Sumo Logic Google Cloud Platform Source you created. See Google Cloud documentation for the latest configuration steps.

- Use a Push Delivery Method to the Sumo Logic Source URL. To determine the URL, navigate to the Source on the Collection page in Sumo Logic and click Show URL.
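If you prefer the command line to the Cloud Console, the topic and push subscription can also be created with the `gcloud` CLI. The sketch below only assembles the two commands and prints them, so you can review them before running anything. The subscription name and the Source URL are placeholders, and the helper function is hypothetical.

```python
import shlex


def pubsub_commands(topic: str, subscription: str, source_url: str) -> list:
    """Return gcloud commands that create a topic and a push subscription."""
    return [
        ["gcloud", "pubsub", "topics", "create", topic],
        [
            "gcloud", "pubsub", "subscriptions", "create", subscription,
            f"--topic={topic}",
            f"--push-endpoint={source_url}",
        ],
    ]


if __name__ == "__main__":
    # Placeholder values: substitute the URL shown by Show URL for your Source.
    commands = pubsub_commands(
        topic="pub-sub-logs",
        subscription="sumo-push-subscription",
        source_url="https://example.com/your-sumo-source-url",
    )
    for command in commands:
        print(shlex.join(command))
```

Setting `--push-endpoint` is what makes the subscription use push delivery. Without it, `gcloud` creates a pull subscription.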

Limitations

Google limits the volume of data sent from a Topic. Our testing resulted in the following data limits:

| Topics | Megabytes per second | Payload size |
|---|---|---|
| One | 18 MBps (1.5 TB/day) | 100 KB |
| One | 6 MBps (0.5 TB/day) | 2.5 KB |

These limits may vary based on your setup and are based on our previous tests.

We recommend the following:

- Shard messages across topics within the above data limits.

- Ask GCP to increase the allowable capacity for the topic.
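The daily figures in the table follow from multiplying the per-second rate by 86,400 seconds. The sketch below reproduces that arithmetic and gives a rough estimate of how many topics a given daily volume would need if messages are sharded evenly. It assumes decimal units (1 TB = 1,000,000 MB) and treats the tested rates as fixed ceilings, which your setup may not match.

```python
import math

SECONDS_PER_DAY = 86_400
MB_PER_TB = 1_000_000  # decimal units, an assumption


def mbps_to_tb_per_day(mbps: float) -> float:
    """Convert a sustained rate in MB per second to TB per day."""
    return mbps * SECONDS_PER_DAY / MB_PER_TB


def topics_needed(daily_tb: float, per_topic_mbps: float) -> int:
    """Estimate how many topics keep each topic under the tested rate."""
    return math.ceil(daily_tb / mbps_to_tb_per_day(per_topic_mbps))


if __name__ == "__main__":
    for rate in (18, 6):
        print(f"{rate} MBps is about {mbps_to_tb_per_day(rate):.2f} TB/day")
    # Hypothetical workload of 4 TB/day
    print("Topics at 18 MBps:", topics_needed(4.0, 18))
    print("Topics at 6 MBps:", topics_needed(4.0, 6))
```

Because the smaller payload size reached a lower rate in testing, a workload made up of many small messages needs more topics for the same daily volume.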

Create export of Google BigQuery logs from Google Logging

In this step you export logs to the Pub/Sub topic you created in the previous step.

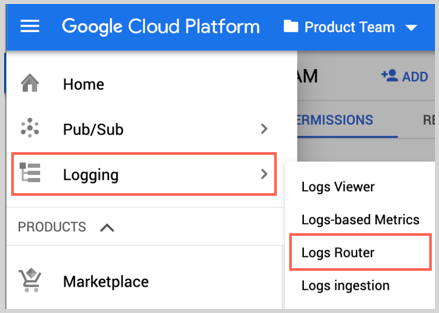

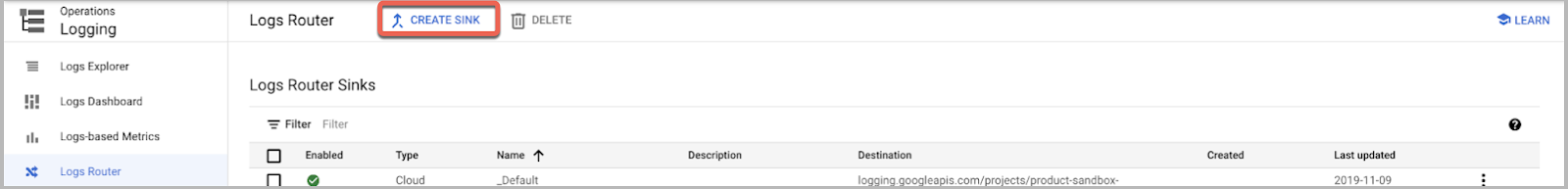

- Go to Logging and click Logs Router.

- Click Create Sink.

- As part of Create logs routing sink, add the following information.

- Enter a Sink Name. For example, "gce-vm-instance".

- Select "Cloud Pub/Sub" as the Sink Service.

- Set Sink Destination to the Pub/Sub topic you created in the Configure a Pub/Sub Topic for GCP procedure. For example, "pub-sub-logs".

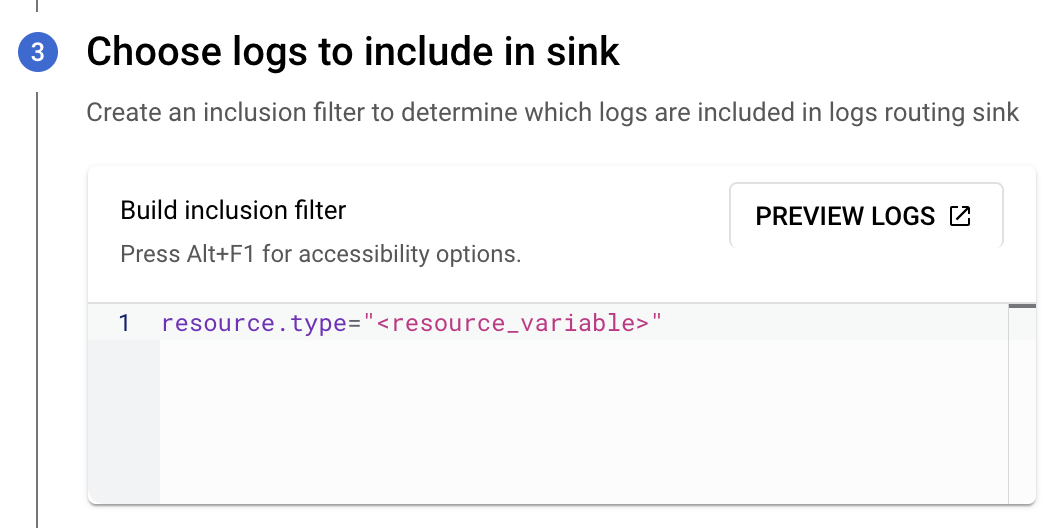

- In the Choose logs to include in sink section, for resource_type, replace "<resource_variable>" with "bigquery_resource".

- Click Create Sink.
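The same sink can be expressed as a single `gcloud logging sinks create` command, where the filter `resource.type="bigquery_resource"` corresponds to the resource type entered in the steps above. The sketch below assembles that command as an argument list and prints it. The sink name and project ID are hypothetical, and the helper function is for illustration only.

```python
import shlex


def sink_create_command(sink_name: str, project_id: str, topic: str,
                        resource_type: str = "bigquery_resource") -> list:
    """Return a gcloud command that routes matching logs to a Pub/Sub topic."""
    destination = f"pubsub.googleapis.com/projects/{project_id}/topics/{topic}"
    log_filter = f'resource.type="{resource_type}"'
    return [
        "gcloud", "logging", "sinks", "create", sink_name, destination,
        f"--log-filter={log_filter}",
    ]


if __name__ == "__main__":
    # Hypothetical sink name and project ID; the topic name is the example used above.
    command = sink_create_command("bigquery-audit-sink", "my-project", "pub-sub-logs")
    print(shlex.join(command))
```

Passing the arguments as a list, rather than one string, avoids shell-quoting problems with the double quotes inside the filter.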

Installing the Google BigQuery App

Now that you have set up log collection, you can install the Google BigQuery App to use the pre-configured searches and dashboards that provide visibility into your environment for real-time analysis of overall usage.

Locate and install the app you need from the App Catalog. If you want to see a preview of the dashboards included with the app before installing, click Preview Dashboards. To install the app:

- From the App Catalog, search for and select the app.

- Select the version of the service you're using and click Add to Library. Version selection currently applies to only a few apps. For more information, see Install the Apps from the Library.

- To install the app, complete the following fields.

- App Name. You can retain the existing name, or enter a name of your choice for the app.

- Data Source. Select either of these options for the data source.

- Choose Source Category, and select a source category from the list.

- Choose Enter a Custom Data Filter, and enter a custom source category beginning with an underscore. Example: (_sourceCategory=MyCategory).

- Advanced. Select the Location in Library (the default is the Personal folder in the library), or click New Folder to add a new folder.

- Click Add to Library.

Once an app is installed, it will appear in your Personal folder, or other folder that you specified. From here, you can share it with your organization.

Panels will start to fill automatically. It's important to note that each panel slowly fills with data matching the time range query and received since the panel was created. Results won't immediately be available, but with a bit of time, you'll see full graphs and maps.

Viewing Google BigQuery Dashboards

The Google BigQuery App comes with pre-configured searches and dashboards that provide visibility into your environment for real-time analysis of overall usage.

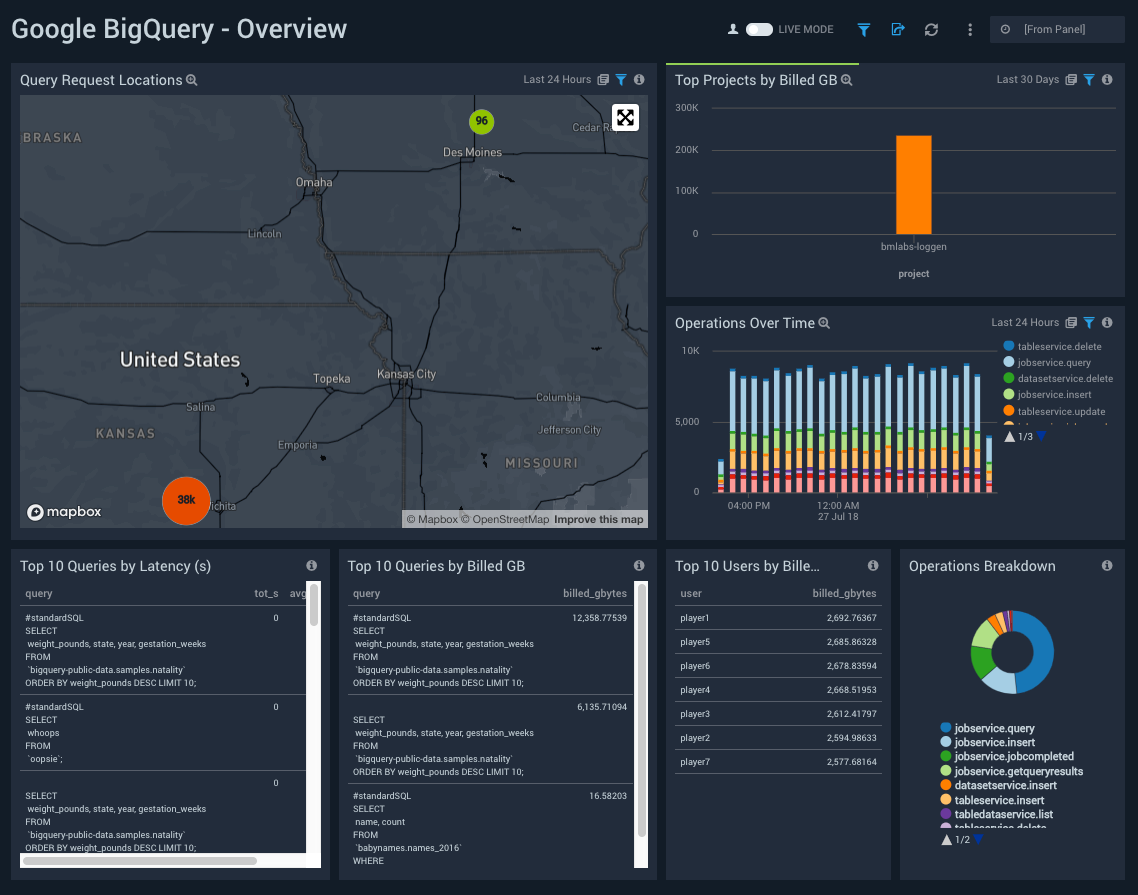

Overview

See an overview of queries, projects, and operations in Google BigQuery.

Query Request Locations. Shows the number of query requests in the last 24 hours and their location on a map.

Top Projects by Billed GB. Shows the top projects based on total billed gigabytes in the last 24 hours on a column graph.

Operations Over Time. Shows operations over time in the last 24 hours on a column graph.

Top 10 Queries by Latency (s). Shows top 10 queries based on latency in the last 24 hours on a table.

Top 10 Queries by Billed GB. Shows top 10 queries based on billed gigabytes in the last 24 hours on a table.

Top 10 Users by Billed GB. Shows top 10 users based on billed gigabytes in the last 24 hours on a table.

Operations Breakdown. Shows a count of all operations in the last 24 hours on a pie graph.

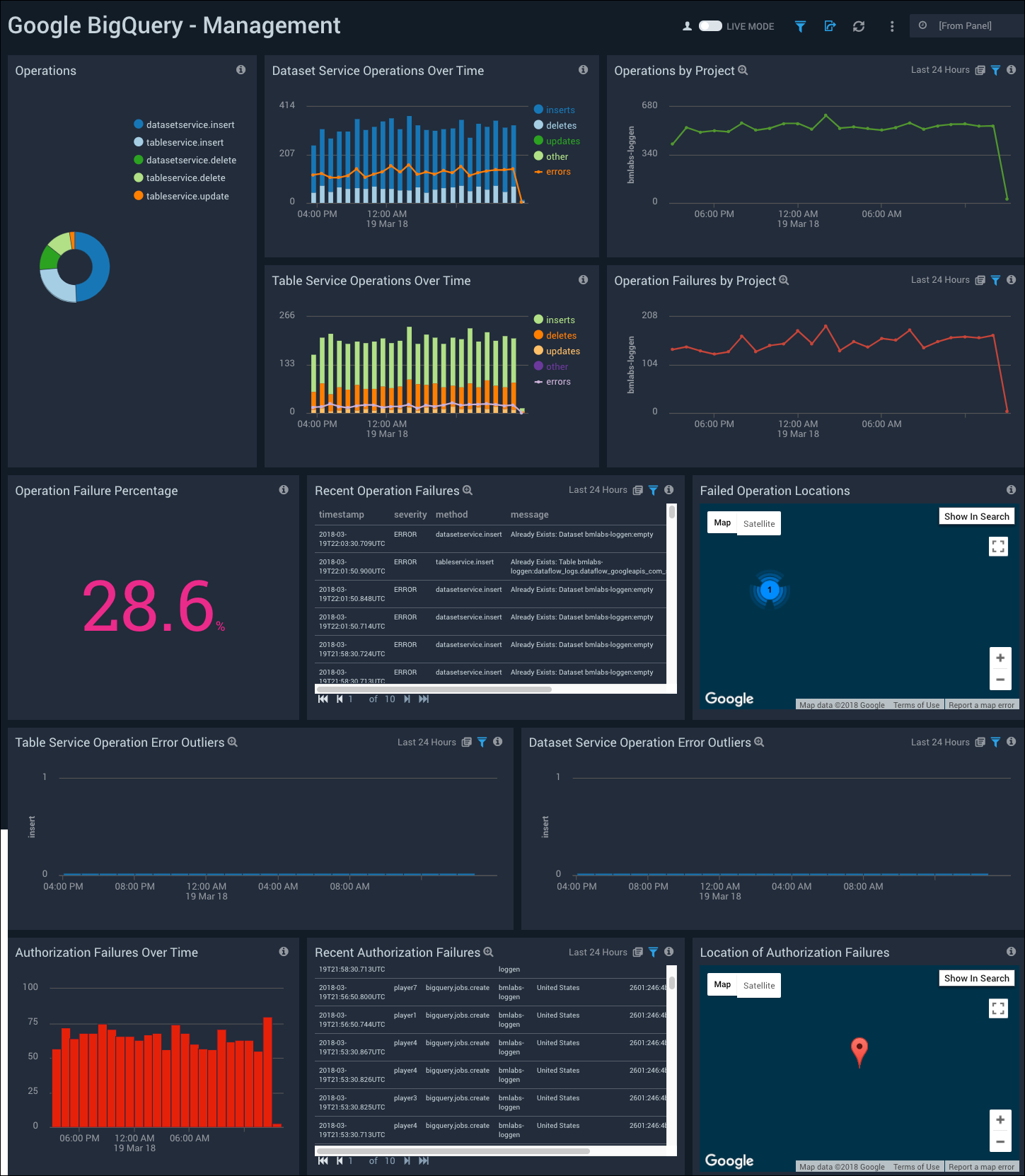

Management

See information about Google BigQuery operations, including an operations breakdown, dataset service and table service operations over time, operations and operations failures by project, location, and over time.

Operations. Shows a count of all operations in the last 24 hours on a pie graph.

Dataset Service Operations Over Time. Shows the number of dataset service operations and errors over time in the last 24 hours on a column graph.

Operations by Project. Shows trends in operations by project in the last 24 hours on a line graph.

Table Service Operations Over Time. Shows the number of table service operations and errors over time in the last 24 hours on a line graph.

Operation Failures by Project. Shows trends in operation failures by project in the last 24 hours on a line graph.

Operation Failure Percentage. Shows the percentage of operations that fail in the last 24 hours.

Recent Operation Failures. Shows a table with recent operations that failed in the last 24 hours.

Failed Operation Locations. Shows the location of failed operations in the last 24 hours on a world map.

Table Service Operation Error Outliers. Shows the number of table service operation error outliers in the last 24 hours on a column graph.

Dataset Service Operation Error Outliers. Shows the number of dataset service operation error outliers in the last 24 hours on a column graph.

Authorization Failures Over Time. Shows the number of total authorization failures over time in the last 24 hours on a column graph.

Recent Authorization Failures. Shows a table with recent authorizations that failed in the last 24 hours.

Location of Authorization Failures. Shows the location of authorization failures in the last 24 hours on a world map.

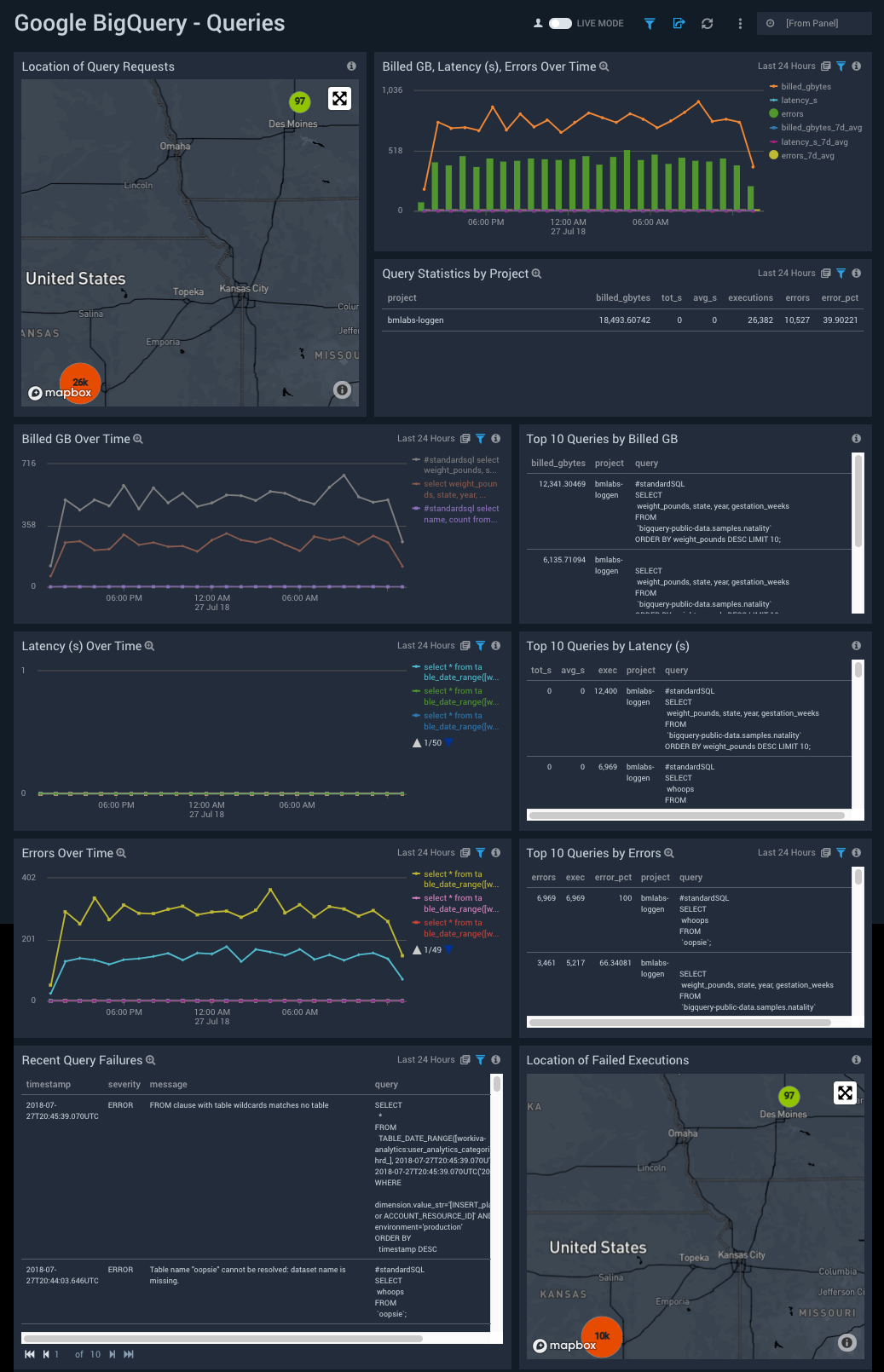

Queries

See information about queries in Google BigQuery, including billed GBs, latency, and errors.

Location of Query Requests. Shows the number of query requests in the last 24 hours and their location on a map.

Billed GB, Latency (s), Errors Over Time. Shows trends in billed gigabytes and latency over time in the last 24 hours on a line graph. Also shows the number of errors over time in the last 24 hours on a column graph.

Query Statistics by Project. Shows a table with query statistics based on projects in the last 24 hours.

Billed GB Over Time. Shows trends in billed gigabytes over time in the last 24 hours on a line graph.

Top 10 Queries by Billed GB. Shows a table with top 10 queries based on billed gigabytes in the last 24 hours.

Latency (s) Over Time. Shows trends in latency over time in the last 24 hours on a line graph.

Top 10 Queries by Latency (s). Shows a table with top 10 queries based on latency in the last 24 hours.

Errors Over Time. Shows trends in errors over time in the last 24 hours on a line graph.

Top 10 Queries by Errors. Shows a table with top 10 queries based on errors in the last 24 hours.

Recent Query Failures. Shows a table with recent query failures in the last 24 hours.

Location of Failed Executions. Shows the number of failed executions in the last 24 hours and their location on a map.

Users

See information about users in Google BigQuery, including query operations, billed GBs, query latency, and query failures by user.

Location of Users Executing Queries. Shows the number of users executing queries in the last 24 hours and their location on a map.

User Management Operations. Shows the number of user management operations in the last 24 hours on a column graph.

Top 10 Users by Query Executions. Shows a table with top 10 users based on query executions in the last 24 hours.

Top 10 Users by Billed GB. Shows a table with top 10 users based on billed gigabytes in the last 24 hours.

Top 10 Users by Latency (s). Shows a table with top 10 users based on latency in the last 24 hours.

Query Executions by User Over Time. Shows trends in query executions based on users over time in the last 24 hours on a line graph.

Billed GB by User Over Time. Shows trends in billed gigabytes based on users over time in the last 24 hours on a line graph.

Latency (s) by Users Over Time. Shows trends in latency based on users over time in the last 24 hours on a line graph.

Top 10 Users by Errors. Shows a table with top 10 users based on errors in the last 24 hours.

Recent Query Failures by User. Shows a table with recent query failures in the last 24 hours.

Errors by User Over Time. Shows trends in errors based on users over time in the last 24 hours on a line graph.

Location of Users with Errors. Shows the number of users with errors in the last 24 hours and their location on a map.